Introduction to Docker Fundamentals

Docker adoption is growing quickly across organizations, which is why certification courses covering Docker fundamentals are now easy to find. Before going further, it helps to get a quick overview of what Docker is and what benefits it offers.

Docker has gained immense popularity in the IT industry because of the range of applications and the flexibility it offers. It is useful to developers and system administrators alike. You can build your application, package it together with its dependencies into containers, ship those containers, and run them on other machines, which makes the work easier.

Docker and containerization are often seen as an improvement on, or more precisely an extension of, virtualization. A virtual machine can perform the same task, but it is generally less efficient than a Docker container. So, let’s dive in and understand the Docker fundamentals.

What is Docker?

Docker is a tool that helps developers and system administrators deploy applications in containers that run on a host operating system such as Linux. More broadly, it is a platform for developing, shipping, and running applications.

It lets you separate your applications from your infrastructure so that you can deliver software quickly. With Docker, you can manage your infrastructure in much the same way you manage your applications, and the interface is easy to use. Containerization is another popular term associated with Docker: it refers to using containers to deploy applications on a platform such as Linux. Docker first appeared in 2013, and containers have since become a staple of modern software development.

Uses of Docker are:

- It provides the tooling to create containers and use them while developing your applications.

- The container becomes the unit for distributing and testing the developed application.

- The application can then be deployed and tested locally, on cloud services, or on a hybrid mix of the two.

Understanding the Docker Architecture

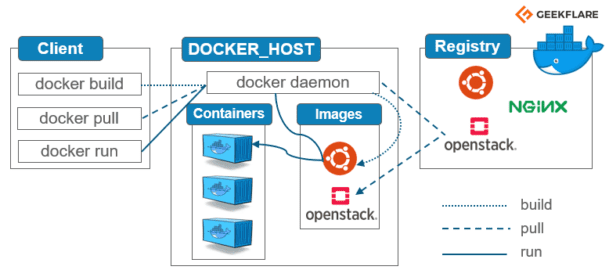

Docker architecture follows the client-server model. The Docker client communicates with the Docker daemon, which does the heavy lifting of building, running, and distributing your Docker containers. Docker architecture has several components like:

- Docker Client (CLI): the command-line tool used to issue Docker commands

- Docker Host: the machine that runs the Docker daemon

- Docker Registry: a store for Docker images; Docker Hub is the best-known public registry

The Docker daemon, running on the Docker host, is responsible for building images and for creating and running containers. The following points describe how the Docker architecture works.

- A build command issued from a client triggers the Docker daemon to build a Docker image. The daemon runs on the Docker host and acts on the inputs given by the clients. Once the image is built, it is stored on the host and can be pushed to a registry, from which it can be retrieved when required. The registry can be Docker Hub, a local repository, or cloud-based storage.

- If you do not want to build an image yourself, you can pull an image that is already present in the registry. Other users in the community may have uploaded it.

- A run command from the client runs the Docker image: the daemon creates a container from the image and starts it.
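The build, pull, and run steps above can be sketched as the command lines a client would send to the daemon. This is a minimal illustration, not a full wrapper: the helper names and the image tag `myapp:1.0` are hypothetical, and the code only assembles and prints the commands rather than executing them.

```python
from __future__ import annotations

import shlex


def build_cmd(tag: str, context: str = ".") -> list[str]:
    """Ask the daemon to build an image from the given build context."""
    return ["docker", "build", "-t", tag, context]


def pull_cmd(image: str) -> list[str]:
    """Fetch an existing image from a registry instead of building it."""
    return ["docker", "pull", image]


def run_cmd(image: str, *, detach: bool = False,
            ports: dict[int, int] | None = None) -> list[str]:
    """Create and start a container from an image."""
    cmd = ["docker", "run", "--rm"]  # --rm removes the container on exit
    if detach:
        cmd.append("-d")  # run in the background
    for host_port, container_port in (ports or {}).items():
        cmd += ["-p", f"{host_port}:{container_port}"]
    cmd.append(image)
    return cmd


if __name__ == "__main__":
    # Image names are placeholders chosen for illustration.
    for cmd in (
        build_cmd("myapp:1.0"),
        pull_cmd("nginx:latest"),
        run_cmd("myapp:1.0", detach=True, ports={8080: 80}),
    ):
        print(shlex.join(cmd))
```

On a machine with Docker installed, each list could be passed to `subprocess.run` to execute it. Keeping commands as argument lists avoids shell-quoting problems.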

Why is It Recommended to Go for Docker?

Because a container packages an application together with its dependencies, Docker avoids the version mismatches that can appear when the same application runs in different setups. This saves the developer time and effort when creating and running environments. Three parameters are commonly used to compare a virtual machine with a Docker container:

1. Size:

This comparison is based on the amount of resources that Docker or a virtual machine uses while running. Containers typically use less storage space, and the space saved can be used to create more containers, which is not the case with virtual machines.

2. Startup:

This comparison is based on boot time. Because a virtual machine has to boot its guest operating system from scratch, its startup time is higher.

3. Integration:

This comparison is based on the ability to integrate easily with other tools. With virtual machines, integration with DevOps tools tends to be limited, and so is what you can do with them. With Docker, several instances can be set up quickly, which makes such integration easier.

Why are Docker containers popular?

Containerization and Docker containers are terms used widely by engineers around the globe. Their popularity rests on the features they offer, which include:

- Docker is flexible to use. Even complex applications can be containerized.

- Updates and upgrades can be deployed and swapped in easily.

- An image can be built locally, deployed to the cloud, and run anywhere, so portability is high.

- Container replicas can be created and scaled up easily and efficiently.

- Services can be stacked vertically, on the fly.

Features of Docker

Docker lets you reduce the size of a deployed application, because containers give it a smaller operating-system footprint. Containers also help people work across the different departments of a company: with Docker, different teams in an organization can coordinate and work together easily.

Docker can run on a wide range of platforms. Applications can be run locally, and deployed and tested on cloud platforms. A hybrid environment can also be used for running and testing the application. Scaling container deployments is usually straightforward as well.

Docker containers are lightweight, which makes them cost-effective and economical with space. The capacity you save can be used for other workloads. Docker is well suited to small and medium-sized deployments.

Getting started with the Docker tutorial

Docker fundamentals are easy to pick up, and you don’t have to be a pro to learn them. There are no strict prerequisites for getting started with Docker. Basic knowledge of cloud services and web application development will help you through the process, but it is not mandatory. The main installation steps are listed here.

- Docker is supported on several OS platforms, and setting it up on your computer with the required tooling is usually easy. Early on there were problems on OS X and Windows, but these have since been resolved. Start with the Docker installation. Once the “hello world” message appears, you know that the installation was done correctly and Docker is running fine.

- Before installing on Linux, make sure that the kernel version is 3.8 or higher, because Docker is supported only on such versions. Keeping the OS up to date also helps.

- The next step is to install the certificates and other packages that Docker needs, so that it runs easily and smoothly.

- After that, add Docker’s GPG key, which is used to verify the packages downloaded during installation.
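The kernel requirement in the steps above can be checked before installing. The sketch below is one way to do it, assuming the release string starts with `major.minor`, as it normally does on Linux. The function names are illustrative.

```python
import platform
import re

MIN_KERNEL = (3, 8)  # minimum version stated in the steps above


def parse_kernel_release(release: str):
    """Return (major, minor) from a string like '5.15.0-91-generic', or None."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def kernel_is_supported(release: str, minimum=MIN_KERNEL) -> bool:
    """Compare numerically, so that 3.10 counts as newer than 3.8."""
    version = parse_kernel_release(release)
    return version is not None and version >= minimum


if __name__ == "__main__":
    if platform.system() != "Linux":
        print(f"Not running Linux ({platform.system()}); kernel check skipped.")
    else:
        release = platform.release()
        verdict = "supported" if kernel_is_supported(release) else "too old"
        print(f"Kernel {release}: {verdict}")
```

The versions are compared as integer tuples rather than strings, because a string comparison would wrongly rank "3.10" below "3.8".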

Docker files

The file that contains the sequence of instructions for creating a Docker image is called a Dockerfile. It is processed with the docker build command, which produces a Docker image. Writing one after installation is part of the Docker fundamentals and a quick way to start learning Docker. When the image is run with the “docker run” command, the application starts executing in a container, locally or on the cloud as required.
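To make the idea of a sequence of instructions concrete, here is a small sketch that renders a minimal Dockerfile as text. The base image, working directory, install command, and `app.py` are assumptions chosen for illustration. A real project would pick its own values.

```python
import json


def render_dockerfile(base_image: str, workdir: str,
                      install_cmd: str, start_cmd: list) -> str:
    """Render a five-instruction Dockerfile as a string."""
    lines = [
        f"FROM {base_image}",   # image to start from
        f"WORKDIR {workdir}",   # directory used by later instructions
        "COPY . .",             # copy the build context into the image
        f"RUN {install_cmd}",   # executed once, at build time
        # Exec-form CMD is a JSON array: the default command at run time.
        f"CMD {json.dumps(start_cmd)}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # All four arguments are hypothetical example values.
    print(render_dockerfile(
        "python:3.12-slim",
        "/app",
        "pip install -r requirements.txt",
        ["python", "app.py"],
    ), end="")
```

Saved as `Dockerfile` in the project directory, this text could be built with `docker build -t <name> .` and started with `docker run <name>`.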

Docker Hub, on the other hand, is a place to store Docker images. It acts as a cloud registry that holds the images uploaded by users of the community. You can also build your own Docker image and upload it to Docker Hub.

Docker Compose lets you define and run several containers together on a single host.
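As a rough sketch of what running several containers together looks like, the code below renders a minimal Compose file with two services. The service names, images, and port mapping are placeholders. Only the `image` and `ports` keys are handled, which is a small part of what a Compose file can express.

```python
from __future__ import annotations


def render_compose(services: dict[str, dict]) -> str:
    """Render a minimal Compose file: one `image` and optional `ports` per service."""
    lines = ["services:"]
    for name, spec in services.items():
        lines.append(f"  {name}:")
        lines.append(f"    image: {spec['image']}")
        ports = spec.get("ports", [])
        if ports:
            lines.append("    ports:")
            for mapping in ports:
                # Quote "host:container" so YAML keeps it as a string.
                lines.append(f'      - "{mapping}"')
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical two-service setup: a web server and a cache.
    print(render_compose({
        "web": {"image": "nginx:latest", "ports": ["8080:80"]},
        "cache": {"image": "redis:latest"},
    }), end="")
```

Saved as `compose.yaml`, the output could be started with `docker compose up`, which creates one container per service on the same host.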

Docker engine

To put it very simply, Docker Engine is the heart of any Docker system. A host with Docker installed is said to be running Docker Engine. It has three main components:

- A long-running process called the daemon.

- A command-line interface (CLI), which acts as the client.

- A REST API, which the CLI client uses to communicate with the Docker daemon.
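The REST API between client and daemon can be exercised directly. The sketch below assumes a Linux or macOS host where the daemon listens on the Unix socket `/var/run/docker.sock` (a common default, but configurable) and that the current user may read it. The class and function names are illustrative.

```python
import http.client
import json
import socket

DEFAULT_SOCKET = "/var/run/docker.sock"  # assumed default location


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that talks over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        # The host name is only used for the Host header.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def engine_get(path: str, socket_path: str = DEFAULT_SOCKET):
    """Send GET <path> to the daemon; return (HTTP status, decoded JSON body)."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        return response.status, json.loads(response.read())
    finally:
        conn.close()


if __name__ == "__main__":
    try:
        status, body = engine_get("/version")
        print(status, body.get("Version"))
    except (OSError, http.client.HTTPException) as exc:
        print(f"Could not reach the Docker daemon: {exc}")
```

This is the same kind of request the CLI issues when you run `docker version`. If no daemon is listening, the script reports the connection error instead of failing.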
